We can say that 'quality' means meeting requirements, and 'assurance' is proving that we've met those requirements. Today, let's look at where requirements come from - the client, the user, standards specifications, and your team. And then: testing comes in all shapes and sizes. Today we'll also look at why we test, how we design our tests, what all the different types of testing are, and how they fit into a team's lifecycle.

Table of Contents

- Client Requirements

- User Requirements

- Beyond the ask: Security & Standards.

- Team Requirements

- Why do we test?

- What do we test?

- How do we test?

- Summary

What is 'Quality'?

When making any kind of product, quality is defined by meeting requirements.

In web development, requirements come from a variety of sources:

- Client requirements

  - What the client wants.

- User requirements

  - What the user needs.

- Standards

  - What the language specification says.

- Team requirements

  - What the team wants.

Client requirements

... are strange and arcane. They are a chimera that may elude you long after launch, shimmering like C-beams in the dark near the Tannhäuser Gate.

Hopefully you have a manager who can translate client requirements into technical requirements, so that requirements like, "brand synergy" become actionable items, like "team photos on the 'About Us' page".

Devs are often a degree removed from clients, so that client requirements get translated through content and design teams. However, if you're freelancing or working in a smaller shop, or if your team doesn't do UI prototyping as part of the design phase, you'll need to parse client requirements.

When your code is ready for a "release", there is usually a phase of testing called "user acceptance testing". This may involve actual users, but most often this is "client acceptance", meaning the client gets to test the release.

Client acceptance is when you're really relieved that you documented the client requirements at the beginning, and had them sign off on the proposed designs. Client acceptance is the phase in which the client changes their mind, or introduces new requirements. Since those changes weren't captured in the initial requirements, you get to move deadlines and charge them for the extra work.

Client requirements are arguably the most important requirements outside of accessibility. Meeting client requirements is what gets you paid and lets you go home at 5pm.

User requirements

User requirements are anything a user needs to accomplish their goals while using your application. In a perfect world, you could sit down with one user and just ask what they needed.

The process of generating these requirements, however, is more complex.

User requirements come from user testing. This could come from observing users (usability testing), interviewing users (user experience testing), or digital tracking of user behaviour (analytics).

Beyond the ask: Security & Standards.

You're going to learn about actively collecting user and client requirements in various other courses, so it won't be something we spend too much time on here.

There are things, however, that are required by both the client and the user that they shouldn't have to (and most likely won't think to) ask for.

- Security

  - Keeping user data from unauthorized access, making sure the application keeps working, and keeping the application free from unauthorized changes are requirements often left unspoken by clients and users alike, but they are the responsibility of the people engineering the application.

- Standards

  - Sticking to language specifications, like those created by WHATWG & the W3C (HTML, CSS & accessibility) and ECMA International (JavaScript), helps make your application accessible for people with disabilities, and future-proofed to work on devices that haven't even been conceived of yet.

Team Requirements

There's a term I've started seeing thrown around lately that I love: 'DX'. It stands for Developer Experience.

I've heard it in the context of different frameworks (e.g. "Svelte has great DX compared to React"), and it's the reason we have Team Requirements.

Team requirements might be things like code naming conventions, formatting, commenting conventions, version control workflow, design patterns, testing strategies, etc.

If you don't know what some (or any!) of those things are - that's okay! We'll be talking about them all in this class.

All you need to know at this point is that every high-functioning team, big or small, has at least some agreed-upon patterns when writing, storing and/or deploying their code.

The patterns themselves may vary, but what's important is that the team agrees. This works on the same principle that allows standards-compliant code to run on different devices: predictability. If I know how you're going to write your code, I can predict how I can write mine so it "plays nice together". It also allows me to easily read and understand your code (or, you know, my own code that I wrote 6 months ago).

The purpose of team requirements is to improve the Developer Experience, making collaboration, enhancement and maintenance quick and easy.

In summary...

...we can say that in the world of web development, "quality" is satisfying the client, the user, the standards, and our team.

What is 'Assurance'?

Assurance is the process by which we create confidence.

It sounds like 'insurance', and that's not a coincidence! Insurance is a process that kicks in when something unexpected happens. Assurance is a process that's in place to make sure unexpected things don't happen.

Why do we test?

We test so we can forget about requirements, so we can track down bugs, and so we can prove our work.

Testing to forget

When dealing with web applications, we take requirements from a multitude of sources (including, but not limited to: the user, the client, our teams, web standards). We do our best to translate these requirements into an application built with a variety of interoperable languages.

I have an enduring respect for servers at restaurants. Many servers can remember a multitude of complicated orders, coupled with the identity of the orderer, and efficiently communicate with the kitchen, all before any actual serving happens.

Most, however, will offload memory read/write tasks, providing transferability and traceability through non-volatile data storage.

The idea here is, the more complicated a task, the greater the need to capture those complications and put them somewhere that doesn't rely on our memory.

Automated testing, along with manual testing responsibilities documented and distributed across our team, allows us to capture requirements so that we can forget about them without failing to meet them.

Testing to track

How long have you spent tracking down an errant semicolon? After a decade of web programming, I'm sure I've accrued days of lost productivity. Errors are inevitable, but there are circumstances that are force-multipliers when it comes to mysteriousness.

Application complexity

What's breaking that layout? The CSS? In which file? Is the style coming from a JavaScript function? Is it in that 3rd-party library? Or is it the browser defaults?

The more you have influencing your application, the longer you have to wear your deerstalker cap and puff on your pipe.

This is why we test both the parts of an application individually and the application as a whole. Testing individually allows us to find bugs in a contained space before they reach the rest of the application. Testing the whole allows us to capture bugs that emerge from clashes between the parts.

Application maturity

We test early, and we test often.

Catching a bug while you're coding is a healthy part of everyday life. Catching a bug in production will make your manager call you at 1am on a Tuesday.

The longer it takes to catch an error, the more costly a bug becomes (and the more people you have to explain yourself to).

Re-use

The DRY principle ("Don't Repeat Yourself") is tremendously useful in programming, and is definitely to be encouraged. It must be understood, however, that it makes a trade-off when it comes to bugs: code reuse means bug reuse. The impact of an error in code that is widely used will be... wide.

Now, the obvious benefit of DRY programming is that fixing bugs is quick - fix it once and it's fixed everywhere, no tracking down every instance of an error that was manually copied and pasted into independent pages. The price of this efficiency is respecting the impact of code re-use. We test early to mitigate the risk of a widely-distributed bug, and we test robustly to account for the variety of contexts in which our code may be used.

By testing robustly, we take into consideration the variety of inputs and outputs our code may be called upon to accommodate, and the various platforms and user-agents our code may encounter.

Testing to prove

Arguing with people sucks. Except when it's fun.

I've had the good fortune to have some fun arguments with other developers on my team about code. What made those arguments fun is that we would always end up testing what it was we were arguing about - page load speeds, compression sizes, the ability of various code editors to load >1GB-sized files, etc. etc. And they'd always end with someone saying, "Hunh - guess I owe you a coke."

Common advice for writing a resume is to list your accomplishments, not just your responsibilities. Creating metrics will generate some feathers for your cap, and can help you advocate for preferred solutions for your team. But the best outcome of testing, in my view, is to minimize guesswork, ego and conflict in the workplace by generating proofs by objectively testing assumptions.

What do we test?

Coding errors happen every day. If we're lucky, the error means our code doesn't make sense to the computer. In that case, we get near instantaneous feedback in the form of an error message.

❌❌❌ ERR6401: You're welcome!

However, if you want to test not only whether the computer understands you, but also whether you're explaining your intentions properly, you're going to have to create your own error conditions. This will require some critical thinking.

A test is a question, and the simplest questions are "True or False". If you can break your assumptions down into binary, you'll greatly simplify the process.

Some things can be very easy to test in an automated way. Others are essentially impossible (I'm looking at you, users). Luckily, other courses teach you how to test complicated things like, "How do people behave when using the application?" Additionally, other courses teach you how to collect good requirements.

Good requirements will translate easily into testable assumptions. "True or False" is best, but there are other types of questions that are simple to answer in a programmatic way:

- 'It can/cannot be greater/less than x' (boundary testing)

- 'It must allow x' (test-to-pass), or 'it must not allow x' (test-to-fail)

Due diligence

Some questions are too much work to answer, even when automated. If you create a calculator, you don't expect to test every possible combination of numbers. That being said, here's what you might propose to test:

- An input of 0-9 does not throw an error

- An input of 999999 does not throw an error

- An input of -999999 does not throw an error

- 1 + 2 = 3

- -3 + -4 = -7

- 5 * 6 = 30

- 7 * -8 = -56

- 9 - 10 = -1

- -11 - 12 = -23

- 13 - -14 = 27

- 15 / 5 = 3

- 16 / -4 = -4

Properties

Consider the properties of numbers. The questions above are not testing all numbers, but they are testing the following numerical properties:

- Signed/unsigned (positivity or negativity)

- Summable

- Subtractable

- Multipliable

- Divisible

- Multi-digit

What property of numbers was not tested in our list of questions?

What properties do numbers have that are beyond the scope of a calculator (e.g. possibly infinite digits)? What is the boundary of the scope? What should happen when that boundary is reached?

If you have carefully considered the properties of what you are testing, and tested properly, you may still end up with an error for one of the values that you didn't test. Say, for example, that a demon possesses the user whenever they enter the number 666.

Later, we'll see why creating a test plan is important - getting the client or management to sign-off on these testing limitations means they've judged these 'unknown unknowns' to be an acceptable risk. People are way more likely to get mad at you if they're surprised.

Unfortunately, you're not always the one gathering the requirements. Let me state for the record: "Most costly errors are a failure in the requirements". False assumptions and misunderstandings are the most likely issues to make it to production, and, as we saw earlier, the later the stage, the bigger the problem. Regardless of who gathered the requirements, though, understanding the requirements is your responsibility.

A list of requirements red flags:

- Always, Never

  - Are you absolutely sure of these absolutes?

- Obviously

  - Is it, though?

- Usually

  - What about when the unusual happens?

- Etc.

  - Please, do elaborate...

- If

  - Else?

Source(s) of truth

As discussed, requirements can come from multiple places. Even if you understand a requirements document backwards and forwards, you may find one source conflicting with another, e.g. an approved design conflicting with a copy deck, or a styleguide. It's on you to raise this with... well, someone. Someone with the authority to tell you what to consider the "source of truth" - the requirements that override anything that conflicts with them.

Sometimes, no one can tell you. In that case, someone needs to ask the client, or else you're in for a very boring series of meetings.

In summary...

It is your job to understand the requirements. If you don't, ask. If there is conflict, clarify. Know the 'else...', 'etc...', 'must...', and 'cannot...'.

Break the requirements down to their simplest form. Test to equal (true/false) when you can. If that is too costly, test properties. Test boundaries. Test to pass and test to fail.

How do we test?

As discussed, requirements come from a variety of sources. Each type of requirement comes with its own types of testing.

You'll notice that user testing and analytics are absent from the list below. That's because those are types of testing that are focussed on generating requirements. 'Assurance' describes all the processes we follow when meeting requirements.

You also might look at this list and think, 'wait, AM I GONNA HAVE TO DO ALL THIS??'

Nope. As a junior/intermediate developer, you'll want to know how to write your own unit tests, do some ad hoc testing, and some code review. When it comes to things like integration testing, end-to-end testing, etc., a larger team will have that stuff governed by senior devs, DevOps managers and QA managers.

A smaller team (or if you're freelancing) will need to have a pared-down process, but will still need a plan for how to test your releases.

Code specifications - Language Features, Linters, Validators & Manual Testing

Computer languages all have features that can be used to enforce code quality - think data types, scope, mutability, strict mode, etc. We'll learn more about some of these features in this course. For now, just know that a strong developer uses language features to create quality, and welcomes errors as their first line of defense.

A validator will read code and check for any violations of the language specification.

Example: W3C HTML validator

A linter will validate your code and additionally flag style errors. Typically, you can configure a linter, defining new rules, or ignoring existing rules.

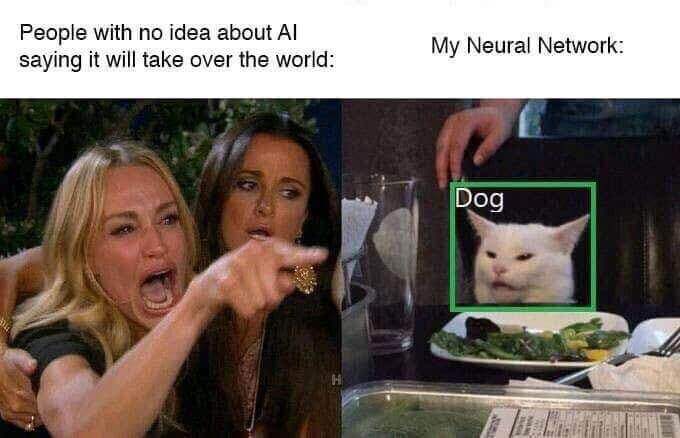

Not all code standards can be captured with automated processes. Standards that are based on intention, like semantics and accessibility, may not be something a computer easily understands (for example, a picture of a dog with the alternative text "a cat" would violate accessibility standards, but this violation is not easily identified by an automated process). In some cases, manual verification is required.

In other words, a computer doesn't understand your intentions, only what you tell it in computer language. You can only automate what a computer understands.

Team standards - Code Review

"When a measure becomes a target, it ceases to be a good measure." (Goodhart's law)

Team standards may be enforced through automated testing, but the most important tool in code-sharing is the code review.

Simply, when you want to merge the code you've been working on into the larger codebase, you ask someone else on the team to read it. If they understand it, and feel it meets the team's standards, it can be merged.

This is a 'safety net'. If your code is too complex (or too poorly commented) for another team member to understand, how will they work with it? Maybe you accidentally (or otherwise) 'gamed' the automated testing. Your teammates will call you out - and that's a good thing.

User & client requirements - the kitchen sink, et al.

In the words of the Legendary Stardust Cowboy, this is where the rubber hits the road.

You have your requirements, and you're ready to start coding. If you're under a tight deadline, and/or writing low-complexity code, you may simply write your code and perform some ad hoc testing. If you're doing anything more complex, you'll want to write unit tests.

Ad hoc testing

Ad hoc testing is the bare minimum, seat-of-your-pants testing that you're already doing. You know you need to do a thing, you open your browser to see if the thing works.

Here are some ad hoc testing tools that I use regularly:

Developer Tools

var hw = "Hello World";

console.log(hw);

Inspecting elements is classic ad hoc testing. Are things rendering on the page as intended? What are their CSS properties?

Modern dev tools provide a wealth of data (which we'll do a deep dive on shortly). The Lighthouse and axe auditing tools are invaluable for quickly identifying opportunities to improve load times, SEO and accessibility. Device emulation and responsive testing make checking your CSS breakpoints really easy (but don't be fooled - I've run into bugs on multiple occasions where the emulators didn't match the real devices' behaviour).

Link checking

Checking that there are no broken links on your page is a great "sanity test". A "sanity test" (is this term problematic? it kinda feels problematic, but it is a common industry term... I dunno) is checking whether something can be true. Just because there are no broken links doesn't mean all your links point to the right place, but broken links are never correct.

Link Checker is a great Chrome plugin for verifying that the links on your page aren't broken.

Input testing

It's fine to manually test form inputs - just don't forget to try navigating your forms with your keyboard as well as your mouse. Don't forget about allowing for whitespace (postal code fields, I'm looking at you), the wonderful variety of names people have, how you probably shouldn't regex emails, etc. etc.

If you want to add a degree of automation to your ad hoc testing, I really love the browser automation tool UI Vision. I hesitate to recommend it simply because I don't see it discussed much, but it really has cut many hours of work out of my jobs, or at least saved me some carpal tunnel.

It can automate things like button clicks, navigation, and typing in your browser. It can also loop, read variable values from a spreadsheet, etc. It's not as "set-it-and-forget-it" as headless browser automation (where a tool opens an 'invisible' browser and mimics a user), but I love it for working with bad websites - I put my browser on autopilot, but I can intervene if anything goes wrong.

Unit testing

This is a unit test.

// It should add numbers

var addStuff = function(firstNumber, secondNumber) {

return firstNumber + secondNumber;

};

// Test it

var testAddStuff = function() {

// The test passes if the result is a number

return typeof addStuff(1, 2) === 'number';

};

// Returns true

console.log(testAddStuff());

...but this is not how unit testing is done.

Unit testing is typically done by importing a file into a test file, using a testing framework/assertion library to write tests simply, and using Node to run the test. Don't worry! We're going to learn how to do all three of these things. It's going to look something like this:

/add-stuff.js

var addStuff = function(firstNumber, secondNumber) {

return firstNumber + secondNumber;

};

module.exports = addStuff;

/test.js

var expect = require("chai").expect;

var addStuff = require("./add-stuff.js");

it("returns a number", function() {

expect(addStuff(1, 2)).to.be.a('number');

});

// ✓ returns a number

// 1 passing (5ms)

Like I said, we've got a few concepts to learn in order to implement this, but hopefully you're excited about how intuitive it is to write

expect(beverages).to.have.property('tea').with.lengthOf(3);

What to unit test

At this point, you're probably asking the question, "Do I have to test if every function that returns a number actually returns a number??"

The answer is no, unless it will help.

The point of testing is to save you time, as well as to mitigate risk. So, you need to ask the questions:

- How long will it take to write the test? and

- How long will it take to test this manually?

If the answer to the first question is "2 minutes", and the answer to the second question is "1 minute", then testing manually twice means you should've just written the unit test. You can expect to write fewer tests as you get more comfortable with programming.

Also consider the fact that testing helps you organize your thinking. Testing is the practice of explicitly stating your assumptions. This is a good practice in life, and coding, too!

Which brings us to...

Test-driven development

Test-driven development (or 'TDD') is the practice of writing your tests before you write the thing you're testing.

First we write this...

/test.js

var expect = require("chai").expect;

var addStuff = require("./add-stuff.js");

it("returns a number", function() {

expect(addStuff(1, 2)).to.be.a('number');

});

// 0 passing (5ms)

// 1 failing

//

// 1) returns a number:

// TypeError: addStuff is not a function

// at Context.<anonymous> (test/test.js:4:10)

...and then we write this...

/add-stuff.js

var addStuff = function(firstNumber, secondNumber) {

return firstNumber + secondNumber;

};

module.exports = addStuff;

// Now our test returns

// ✓ returns a number

// 1 passing (5ms)

Continuous Integration

Whether you're working solo or on a team, hopefully you're taking advantage of a version control system like git. Continuous Integration (commonly abbreviated 'CI') is the practice of pushing your code to the shared codebase frequently (minimum once per day).

If someone else's code doesn't play nicely with yours (or vice versa), this will quickly become obvious, and should be simple to address. It also means you're communicating with your teammates frequently, which is nice!

When you attempt to merge into the main branch, this will trigger a number of tests. This includes your unit tests, naturally, but also a set of tests meant to look at the application as a whole. These broader tests tend to be bulkier and slower, maybe taking minutes (compared to milliseconds for your unit tests).

Deployment lifecycle

Typically, your application will be live in a few different places. You've got a copy on your local machine, of course, and then there's the development server where your code gets integrated with that of other developers.

There's probably a version of the application for Quality Assurance testers to run their tests. Once code passes the QA team's tests, this more 'polished' version of the application goes on to a 'UAT' server, where clients can have a look at the code before it goes live.

All of this, at least on a larger team, is usually governed either by a well-thought-out process, or, ideally, by "DevOps managers", who build a whole automated pipeline to handle the chain of deployments.

The point of all this is to filter out bugs. You don't want to take up other developers' time with syntax errors, QA testers' time with integration errors, the client's time with missed requirements, and you certainly don't want to show public users any of the above.

It should be noted that when I use the term 'server' here, this could mean a number of things - from physical machines, to virtual machines, all the way to clusters of containers. The effect is the same - progressively more 'high quality' versions of the application to be tested at higher levels before reaching the end-user.

Integration testing

Integration tests check to make sure that your code can interact with others' code without breaking anything, and, if it's meant to work together, produces the expected results. It may also test your code integrating with outside data sources like a database or an API, testing the expected "data flow".

Despite the larger scope of work, integration tests are usually written with the same testing framework as your unit tests.

End-to-end testing

Whereas integration testing is like unit testing with a broader scope, end-to-end testing (E2E) examines your code from an entirely different perspective. End-to-end testing pretends to be a user and 'acts out' user stories.

This is typically done with a 'headless browser', which runs your application in a browser (that you can't see), clicking stuff, navigating, and filling out forms while taking screenshots and/or video, and collecting other data including reporting on whether custom E2E test conditions succeeded or failed. This may include metrics for "performance testing", which reports on how quickly your application loads and performs user interactions.

Load testing

Load testing is a subset of end-to-end testing. It tests user stories with multiple simulated users simultaneously. This can test performance when a bunch of users are logged on, or it can test assumptions about what happens on your website when multiple people interact, like, "What happens when multiple people put the last of an item in their shopping cart?"

Stress testing

Stress testing is similar to load testing in that it simulates multiple users. However, stress testing deliberately overloads your application to answer questions like, "How many users is too many?", or, "Do users get our custom error message if we get DDoS'd?"

Manual testing

Device emulation, as offered in your developer tools or end-to-end testing automation, is pretty good. In my experience, however, it's possible for it to miss things when it comes to device-specific layout quirks (particularly if the client wants to support things like Blackberrys), as well as gestures like touch or swipe.

Commonly in larger shops, a QA tester will run through user stories on real devices. Even if you're developing solo, it can be a good practice to test things out on whatever real devices you have handy.

Penetration testing

Penetration testing or 'pentesting' is benign hacking by security experts employed by you or your client to try and subvert your security measures. Hopefully you've already tested your security requirements in your integration/E2E testing!

User acceptance testing

In a well-managed environment, this is the point at which you demonstrate to the client that all their requirements have been met. They fool around with it a bit, sign off on the release, and it can be deployed to the end user.

In a poorly managed environment, the client will catch missed requirements, either because those requirements weren't documented properly, weren't passed along properly, or because they changed their mind and they're too valuable (and volatile) of a client to contradict.

Post-Deployment

When the application or feature has gone public, testing doesn't stop there. DevOps monitors the site for performance changes, availability, crashes, and proper API responses.

Summary

Whew! That was a lot! Just to review:

- We test because:

  - It means we don't have to remember everything

  - It's easier than testing things manually

  - The further a bug gets, the more expensive it is to fix

  - It's the price of code re-use

  - It proves our assumptions

- You can automate testing for:

  - A binary, easily

  - A boundary

  - Only things a computer understands

- If you can't test all possibilities, test properties

- Most errors that stick around are errors in the requirements (and it's your job to clarify)

- Testing is a team effort

- We test code and team standards with:

  - Language features

  - Validators (the "letter of the law") & linters (the "spirit of the law")

  - Code review (for happy teammates)

- We test client requirements with:

  - Ad hoc testing (dev tools, link checkers, whatever we can get our hands on)

  - Unit testing (and we call it TDD because we start with the test)

  - The team's CI workflow, including integration testing, E2E testing, load testing, manual device testing, and maybe pen testing and/or stress testing


- 25. Now, the obvious benefit of DRY programming is that fixing bugs is quick - fix it once and it's fixed everywhere, no tracking down every instance of an error that was manually copied and pasted into independant pages. The cost of this efficiency is to respect the impact of code re-use. We test early to mitigate the risk of a widely-distributed bug, and we test robustly to account for the variety of contexts in which our code may be used.

- 26. Testing to prove

- 27. Common advice for writing a resume is to list your accomplishments, not just your responsibilities. Creating metrics will generate some feathers for your cap, and can help you advocate for preferred solutions for your team. But the best outcome of testing, in my view, is to minimize guesswork, ego and conflict in the workplace by generating proofs by objectively testing assumptions.

- 28. What do we test?Coding errors happen eve

- 29. However, if you want to test not only whether the computer understands you, but also whether you're explaining your intentions properly, you're going to have to create your own error conditions. This will require some critical thinking.

- 30.

- 31. Some things can be very easy to test in an automated way. Others essentially impossible (I'm looking at you, users). Luckily, other courses teach you how to test complicated things like, "How do people behave when using the application?" Additionally, other courses teach you how to collect good requirements.

- 32. Good requirements will translate easily into testable assumptions. "True of False" is best, but there are other types of questions that are simple to answer in a programmatic way:

- 33. Due diligence

- 34. An input of 0-9 does not throw an errorAn input of 999999 does not throw an errorAn input of -999999 does not throw an error1 + 2 = 3-3 + -4 = -75 * 6 = 307 * -8 = -569 - 10 = -1-11 - 12 = -2313 - -14 = 2715 / 5 = 316 / -4 = -4

- 35. Properties

- 36. If you have carefully considered the properties of what you are testing, and tested properly, you may still end up with an error for one of the values that you didn't test. Say, for example, that a demon possesses the user whenever they enter the number 666.Later, we'll see why creating a test plan is important - getting the client or management to sign-off on these testing limitations means they've judged these 'unknown unknowns' to be an acceptable risk. People are way more likely to get mad at you if they're surprised.

- 37. Unfortunately, you're not always the one gathering the requirements. Let me state for the record: "Most costly errors are a failure in the requirements". False assumptions and misunderstandings are the most likely issues to make it to production, and, as we saw earlier, the later the stage, the bigger the problem. Regardless of who gathered the requirements, though, understanding the requirements is your responsibility.

- 38. A list of requirements red flags:

- 39. Source(s) of truth

- 40. In summary...

- 41. How do we test?As discussed, requirement

- 42. You also might look at this list and think, 'wait, AM I GONNA HAVE TO DO ALL THIS??'Nope. As a junior/intermediate developer, you'll want to know how to write your own unit tests, do some ad hoc testing, and some code review. When it comes to things like integration testing, end-to-end testing, etc., a larger team will have that stuff governed by senior devs, devOps managers and QA managers.A smaller team (or if you're freelancing) will need to have a pared-down process, but will still need a plan for how to test your releases.

- 43. Code specifications - Language Features, Linters, Validators & Manual Testing

- 44. A validator will read code and check for any violations of the language specification.

- 45. Not all code standards can be captured with automated processes. Standards that are based on intention, like semantics and accessibility, may not be something a computer easily understands (for example, a picture of a dog with the alternative text "a cat" would violate accessibility standards, but this violation is not easily identified by an automated process). In some cases, manual verification is required.

- 46. Skynet is on-line. Ish.

- 47. Team standards - Code Review

- 48. Simply, when you want to merge the code you've been working on into the larger codebase, you ask someone else on the team to read it. If they understand it, and feel it meets the team's standards, it can be merged.

- 49. User & client requirements - the kitchen sink, et al.

- 50. Ad hoc testing

- 51. Developer Tools

- 52. Link checking

- 53. Input testing

- 54. If you want to add a degree of automation to your ad hoc testing, I really love the browser automation tool UI Vision Opens in a new window. I hesitate to recommend it simply because I don't see it discussed much, but it really has cut many hours of work out of my jobs, or at least saved me some carpal tunnel.It can automate things like button clicks, navigation, and typing in your browser, it can loop and read variable values from a spreadsheet, etc. It's not as "set-it-and-forget-it" as headless browser automation (where a tool opens an 'invisible' browser and mimics a user), but I love it for working with bad websites - I put my browser on autopilot, but I can intervene if anything goes wrong.

- 55. Unit testing

- 56. Unit testing is typically done by importing a file into a test file, using a testing framework/assertion library to write tests simply, and using Node to run the test. Don't worry! We're going to learn how to do all three of these things. It's going to look something like this:

- 57. /add-stuff.js

- 58. Like I said, we've got a few concepts to learn in order to implement this, but hopefully you're excited about how intuitive it is to write

- 59. What to unit test

- 60. The point of testing is to save you time, as well as to mitigate risk. So, you need to ask the questions:

- 61. Also consider the fact that testing helps you organize your thinking. Testing is the practice of explicitly stating your assumptions. This is a good practice in life, and coding, too!

- 62. Test-driven development

- 63. First we write this...

- 64. ...and then we write this...

- 65. Continuous Integration

- 66. When you attempt to merge into the main branch, this will trigger a number of tests. These tests tend to be bulkier and slower, maybe taking minutes (compared to milliseconds for your units tests). This includes your unit tests, naturally, but also a set of tests meant to look at the application as a whole.

- 67. Deployment lifecycleTypically, your application will be live in a few different places. You've got a copy on your local machine, of course, and then there's the development server where your code gets integrated with that of other developers.There's probably a version of the application for Quality Assurance testers to run their tests. Once code passes the QA team's tests, this more 'polished' version of the application goes on to a 'UAT' server, where clients can have a look at the code before it goes live.All of this, at least on a larger team, is usually governed either by a well-thought-out process, or, ideally, by "DevOps managers", who build a whole automated pipeline to handle the chain of deployments.The point of all this is to filter out bugs. You don't want to take up other developers' time with syntax errors, QA testers' time with integration errors, the client's time with missed requirements, and you certainly don't want to show public users any of the above.It should be noted that when I use the term 'server' here, this could mean a number of things - from physical machines, to virtual machines, all the way to clusters of containers. The effect is the same - progressively more 'high quality' versions of the application to be tested at higher levels before reaching the end-user.

- 68. All of this, at least on a larger team, is usually governed either by a well-thought-out process, or, ideally, by "DevOps managers", who build a whole automated pipeline to handle the chain of deployments.The point of all this is to filter out bugs. You don't want to take up other developers' time with syntax errors, QA testers' time with integration errors, the client's time with missed requirements, and you certainly don't want to show public users any of the above.It should be noted that when I use the term 'server' here, this could mean a number of things - from physical machines, to virtual machines, all the way to clusters of containers. The effect is the same - progressively more 'high quality' versions of the application to be tested at higher levels before reaching the end-user.

- 69. Integration testing

- 70. End-to-end testing

- 71. This, only with Chrome or Firefox.

- 72. Load testing

- 73. Stress testing

- 74. Manual testing

- 75. Device emulation, as offered in your developer tools or end-to-end testing automation, is pretty good. In my experience, however, it's possible for it to miss things when it comes to device-specific layout quirks (particularly if the client wants to support things like Blackberrys), as well as gestures like touch or swipe.

- 76. Penetration testing

- 77. User acceptance testing

- 78. Post-Deployment

- 79. Summary

- 80. You can automate testing for:A binary, easilyA boundaryOnly things a computer understands

- 81. If you can't test all possibilities, test propertiesMost errors that stick around are errors in the requirements (and it's your job to clarify)Testing is a team effort

- 82. We test code and team standards with:Language featuresValidators (the "letter of the law") & linters (the "spirit of the law")Code review (for happy teammates)

- 83. We test client requirements with:Ad hoc testing (dev tools, link checkers, whatever we can get our hands on)Unit testing (and we call it TDD because we start with the test)The team's CI workflow, including integration testing, E2E testing, load testing, manual device testing, and maybe pen testing and/or stress testing